Clinical Risk Categories for AI Use

A practical framework for deciding when AI assistance is appropriate—classify tasks by risk level and apply the right level of oversight.

Not all clinical tasks carry the same risk. Using AI to draft a patient education handout is very different from using it to calculate a chemotherapy dose. Yet many doctors treat all AI assistance the same way—either avoiding it entirely or trusting it blindly.

This guide gives you a practical framework to decide: When should I use AI? And how much oversight do I need?

Think of it like triaging tasks instead of patients. Some need immediate senior review; others your intern can handle independently.

What Problem This Solves

Without a clear framework, doctors either:

- Over-rely on AI for high-stakes decisions, risking patient safety

- Under-use AI for safe tasks, wasting time they could save

- Feel constant anxiety about whether they’re using AI appropriately

You need clear boundaries. Which tasks are safe to delegate to AI with minimal review? Which need careful verification? And which should AI never handle alone?

This risk classification framework solves:

- Decision paralysis about whether to use AI for a specific task

- Inconsistent practices within your clinic or team

- Wasted time over-checking low-risk AI outputs

- Patient safety gaps from under-checking high-risk outputs

- Medico-legal uncertainty about appropriate AI use

How to Do It (Steps)

Step 1: Identify the Task Category

Before using AI, ask yourself: What type of task is this?

| Category | Examples |
|---|---|
| Administrative | Scheduling, formatting, letter templates |
| Educational | Patient handouts, health information |
| Documentation | Clinical notes, discharge summaries |
| Clinical Decision | Diagnosis, treatment, dosing |
| Emergency | Acute care decisions, triage |

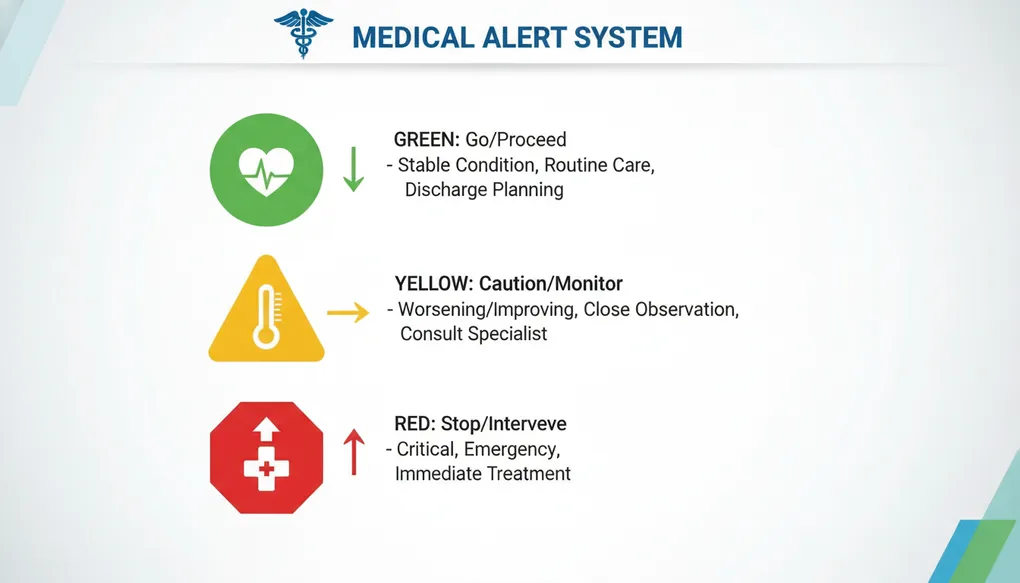

Step 2: Apply the Traffic Light System

GREEN (Low Risk) — Proceed with standard review

- No direct patient care impact

- Errors are easily caught and corrected

- AI output is a starting point, not final product

YELLOW (Medium Risk) — Proceed with careful verification

- Indirect patient care impact

- Errors could cause confusion or delays

- Requires clinical review before use

RED (High Risk) — Proceed with extreme caution or avoid

- Direct patient care impact

- Errors could cause harm

- AI should assist, not decide

Step 3: Match Oversight Level to Risk

| Risk Level | Oversight Required | Before Using Output |
|---|---|---|
| GREEN | Quick scan | Skim for obvious errors |
| YELLOW | Line-by-line review | Verify all clinical content against references |
| RED | Independent verification | Cross-check with guidelines, consult if uncertain |
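The table above is effectively a lookup from risk level to oversight. As a rough illustration only, it could be encoded like this in Python; the names `RiskLevel`, `OVERSIGHT`, and `oversight_for` are hypothetical and not part of any tool this guide assumes.

```python
from enum import IntEnum


class RiskLevel(IntEnum):
    """Traffic-light levels, ordered so that a higher value means higher risk."""
    GREEN = 1
    YELLOW = 2
    RED = 3


# (oversight required, what to do before using the output), copied from the table.
OVERSIGHT = {
    RiskLevel.GREEN: ("Quick scan", "Skim for obvious errors"),
    RiskLevel.YELLOW: ("Line-by-line review",
                       "Verify all clinical content against references"),
    RiskLevel.RED: ("Independent verification",
                    "Cross-check with guidelines, consult if uncertain"),
}


def oversight_for(level: RiskLevel) -> str:
    """Return a one-line reminder of the oversight expected for a risk level."""
    required, before_use = OVERSIGHT[level]
    return f"{level.name}: {required} - {before_use}"


if __name__ == "__main__":
    for level in RiskLevel:
        print(oversight_for(level))
```

Ordering the levels as integers is a design choice made for this sketch: it lets two levels be compared directly when a task sits between zones.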

Step 4: Document Your Verification

For YELLOW and RED tasks, note what you verified: a mental note at minimum, a written one where you can. If something goes wrong, you need to be able to show that you exercised clinical judgment, not blind trust.
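If you prefer a written trail, one possible shape for it is sketched below in Python. Everything here is an assumption made for illustration: the `VerificationRecord` fields, the `record_verification` helper, and the rule that YELLOW and RED entries must say what was checked. It is a stricter reading of this step, not a required format.

```python
from dataclasses import dataclass, field
from datetime import datetime

VALID_LEVELS = {"GREEN", "YELLOW", "RED"}


@dataclass
class VerificationRecord:
    """One entry describing how an AI output was checked before use."""
    task: str
    risk_level: str
    checked_against: str = ""
    timestamp: datetime = field(default_factory=datetime.now)


def record_verification(task: str, risk_level: str,
                        checked_against: str = "") -> VerificationRecord:
    """Create a record; YELLOW and RED tasks must state what was verified."""
    level = risk_level.upper()
    if level not in VALID_LEVELS:
        raise ValueError(f"unknown risk level: {risk_level!r}")
    note = checked_against.strip()
    if level in {"YELLOW", "RED"} and not note:
        raise ValueError(f"{level} tasks need a note on what was verified")
    return VerificationRecord(task=task, risk_level=level, checked_against=note)


if __name__ == "__main__":
    entry = record_verification(
        "Post-operative instructions draft",
        "yellow",
        "Compared against my standard post-operative protocol",
    )
    print(entry)
```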

The Risk Classification Chart

GREEN Zone: Low Risk Tasks

Safe for AI with standard review

| Task Type | Examples | Why It’s Low Risk |
|---|---|---|
| Formatting & Admin | Converting notes to table format, organizing information | No clinical content generated |
| General Health Education | “What is diabetes?” explainer for waiting room | Generic information, not patient-specific |
| Scheduling Content | Clinic timing announcements, holiday notices | No medical decisions |
| Translation Assistance | Converting English handout to Hindi structure | You verify the final translation |
| Research Summaries | Summarizing a paper’s methodology | You read the original for clinical conclusions |
| Email/Letter Templates | Referral letter format, insurance letter structure | You customize with patient details |

Green Zone Prompt Pattern:

Create a [FORMAT] for [GENERAL PURPOSE]. This is for [CONTEXT].

Keep it [CONSTRAINTS].

YELLOW Zone: Medium Risk Tasks

Use AI but verify everything clinical

| Task Type | Examples | Why It’s Medium Risk |
|---|---|---|
| Patient Instructions | Post-procedure care, medication timing | Errors could affect recovery |
| Documentation Drafts | Discharge summary structure, referral letters | Will become part of medical record |
| Communication Templates | Explaining test results, condition counseling | Affects patient understanding |
| Differential Diagnosis Lists | “What else could this be?” brainstorming | Could miss or suggest wrong diagnoses |
| Drug Information | Side effects, interactions (general) | Must verify against current references |
| Protocol Drafts | Clinic workflows, checklists | Will guide clinical decisions |

Yellow Zone Prompt Pattern:

I'm a [SPECIALTY] doctor. Draft [DOCUMENT TYPE] for [CONTEXT].

I will review and verify all clinical content before use.

Include [SPECIFIC ELEMENTS]. Flag any areas where I should

double-check current guidelines.

Critical Yellow Zone Rule: Always tell the AI you will verify, and always actually verify.

RED Zone: High Risk Tasks

AI assists your thinking only—never decides

| Task Type | Examples | Why It’s High Risk |
|---|---|---|
| Diagnosis | “What does this patient have?” | Could miss serious conditions |
| Treatment Decisions | “What drug should I prescribe?” | Wrong choice causes direct harm |
| Medication Dosing | Drug doses, especially for children/elderly/renal impairment | Calculation errors can be fatal |
| Emergency Protocols | Acute MI management, anaphylaxis treatment | Seconds matter, errors kill |
| Prognosis Discussions | Life expectancy, outcome predictions | Profound impact on patients and families |
| Medico-Legal Content | Consent forms, death certificates, legal reports | Legal consequences for errors |

Red Zone Prompt Pattern:

I'm considering [CLINICAL QUESTION] for [ANONYMIZED PATIENT CONTEXT].

I will make the final decision based on my clinical judgment and

current guidelines. Help me think through:

- What factors should I consider?

- What am I potentially missing?

- What questions should I be asking?

Do NOT give me a definitive answer; help me think, not decide.

Critical Red Zone Rule: AI is a thinking partner, not a decision-maker.
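If you reuse these bracketed patterns often, a small helper can fill the placeholders and refuse to produce a prompt while any is still empty. The Python below is a minimal sketch under stated assumptions: `fill_prompt` and `PLACEHOLDER` are hypothetical names, the pattern text is lightly adapted from the Red Zone pattern, and placeholders are assumed to be upper-case words in square brackets.

```python
import re

# Adapted from the Red Zone prompt pattern in this guide.
RED_ZONE_PATTERN = (
    "I'm considering [CLINICAL QUESTION] for [ANONYMIZED PATIENT CONTEXT].\n"
    "I will make the final decision based on my clinical judgment and\n"
    "current guidelines. Help me think through:\n"
    "- What factors should I consider?\n"
    "- What am I potentially missing?\n"
    "- What questions should I be asking?\n"
    "Do NOT give me a definitive answer. Help me think, not decide."
)

# Assumption: a placeholder is upper-case text (spaces, / ' - allowed) in brackets.
PLACEHOLDER = re.compile(r"\[([A-Z][A-Z /'-]*)\]")


def fill_prompt(template: str, values: dict) -> str:
    """Replace every [PLACEHOLDER]; raise KeyError if one has no value."""
    def substitute(match):
        key = match.group(1)
        if key not in values or not values[key].strip():
            raise KeyError(f"placeholder not filled: [{key}]")
        return values[key].strip()

    return PLACEHOLDER.sub(substitute, template)


if __name__ == "__main__":
    print(fill_prompt(RED_ZONE_PATTERN, {
        "CLINICAL QUESTION": "whether to add a second-line agent",
        "ANONYMIZED PATIENT CONTEXT": "a 58-year-old man with type 2 diabetes",
    }))
```

The helper only checks that the blanks are filled. It cannot tell whether the patient context is actually anonymized, so that check remains yours.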

Example Prompts

Example 1: GREEN Zone — Clinic Signage

Create a simple sign for my clinic waiting area explaining that

wait times vary based on patient needs, not arrival order.

The tone should be polite and understanding. Include a line

in Hindi. Keep it under 50 words total.

Why GREEN: No clinical content, easily verified, no patient harm possible.

Example 2: YELLOW Zone — Post-Procedure Instructions

I'm a general surgeon. Draft post-operative care instructions

for a patient who underwent laparoscopic cholecystectomy.

Patient is a 45-year-old teacher who needs to know when

she can return to work.

Include:

- Wound care instructions

- Diet progression

- Activity restrictions with timeline

- Warning signs requiring immediate attention

- Follow-up timing

I will review all clinical content against my standard protocol

before giving this to the patient. Flag any recommendations that

may vary based on individual patient factors.

Why YELLOW: Direct patient impact, but you’re verifying before use.

Example 3: YELLOW Zone — Differential Diagnosis Brainstorming

I'm a physician in Lucknow. A 35-year-old man presents with:

- Fever for 7 days

- Headache

- Abdominal pain

- Relative bradycardia

It's September (post-monsoon).

Help me brainstorm differential diagnoses I should consider

for this presentation. I'll evaluate each based on my clinical

examination and appropriate investigations.

For each possibility, suggest one distinguishing feature

that would help me narrow down the diagnosis.

Why YELLOW: Helps thinking but doesn’t make the diagnosis. You’re using it as a checklist, not an answer.

Example 4: RED Zone — Medication Dosing (Appropriate Use)

I'm calculating gentamicin dosing for a patient with reduced

renal function. I will use standard references and formulae

for the final calculation.

Help me think through:

- What patient factors affect gentamicin dosing?

- What formula or nomogram options exist?

- What monitoring parameters should I plan for?

- What are common dosing errors I should watch for?

I will verify the actual dose using approved clinical resources

and my hospital's protocol. Do not give me a specific dose—

help me approach the calculation correctly.

Why RED handled correctly: You’re asking for guidance on approach, not the answer. The final dose comes from verified sources.

Example 5: RED Zone — What NOT to Do

DANGEROUS PROMPT (Never use):

What dose of methotrexate should I give a 60-year-old patient

with rheumatoid arthritis and eGFR of 45?

Why dangerous: Asking AI to make a dosing decision for a high-risk drug in a patient with renal impairment. Even if the AI gives a reasonable answer, you’ve delegated a RED zone decision.

Bad Prompt → Improved Prompt

Scenario: You need help with a complex diabetes management case

Bad Prompt:

“My diabetic patient’s sugar is uncontrolled despite being on metformin and glimepiride. What should I add?”

What’s wrong:

- Asks AI to make treatment decision (RED zone treated as GREEN)

- Missing critical context (current doses, HbA1c, complications, contraindications)

- No verification layer mentioned

Improved Prompt:

I'm managing a complex diabetes case and want to think through

my options systematically. Patient is a 58-year-old man, BMI 32,

on Metformin 1g BD and Glimepiride 2mg with HbA1c still 9.2%.

No cardiac or renal disease. Can afford branded medications.

Help me think through:

1. What classes of drugs could I consider adding, and what are

the pros/cons of each in this specific patient?

2. What patient factors should influence my choice?

3. What should I assess before making a decision?

4. What questions should I ask the patient at the next visit?

I will make the final prescribing decision based on clinical

examination, current guidelines, and patient preference.

Do not recommend a specific drug; help me think through the decision.

Why it’s better:

- Acknowledges this is a clinical decision (RED zone awareness)

- Provides context for relevant thinking

- Asks for thinking framework, not answer

- Explicitly states human decision-maker role

Common Mistakes

1. Treating All Tasks as Equal Risk

Mistake: Using the same quick-check approach for a patient handout and a drug dose.

Fix: Consciously categorize every task before using AI output.

2. Assuming AI “Knows” to Be Careful

Mistake: Expecting AI to flag when it’s uncertain or when stakes are high.

Fix: AI will confidently give wrong answers for RED zone questions. The safety classification is your job, not the AI’s.

3. Yellow Zone Creep

Mistake: A YELLOW task (draft discharge summary) gradually becomes GREEN (using it without review because “it’s always right”).

Fix: Maintain verification discipline. Every YELLOW output needs review, no matter how good AI seems.

4. Using AI to Avoid Uncertainty

Mistake: Using AI for RED zone tasks because you’re uncertain and want an answer.

Fix: Uncertainty in RED zone = consult a colleague or reference, not AI. AI amplifies confidence, not accuracy.

5. Not Mentioning Verification in Prompt

Mistake: Asking AI for clinical content without signaling you’ll verify.

Fix: Including “I will verify” in prompts often makes AI more careful and more likely to flag uncertainties.

6. Forgetting Gray Areas Default to Higher Risk

Mistake: Convincing yourself a borderline task is GREEN when it might be YELLOW.

Fix: When in doubt, treat as higher risk. Over-checking wastes time; under-checking risks harm.
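The "when in doubt" rule amounts to taking the highest of the zones you are torn between. A minimal Python sketch, where the helper name `resolve_borderline` is hypothetical:

```python
# Zones ordered from lowest to highest risk.
RISK_ORDER = ["GREEN", "YELLOW", "RED"]


def resolve_borderline(*candidates: str) -> str:
    """Given the zones a task might belong to, return the highest-risk one."""
    if not candidates:
        raise ValueError("give at least one candidate zone")
    # list.index raises ValueError for anything that is not a known zone.
    return max(candidates, key=RISK_ORDER.index)


if __name__ == "__main__":
    # Unsure whether a handout with medication timing is GREEN or YELLOW.
    print(resolve_borderline("GREEN", "YELLOW"))
```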

Clinic-Ready Templates

Template 1: Risk Assessment Before Any AI Use

Before using AI, answer these three questions:

1. TASK: What am I asking AI to do?

[ ] Administrative/Formatting

[ ] Patient Education

[ ] Clinical Documentation

[ ] Clinical Decision Support

[ ] Emergency/Acute Care

2. RISK LEVEL: What's the potential harm if AI is wrong?

[ ] GREEN: Easily caught, no patient impact

[ ] YELLOW: Could affect care, needs verification

[ ] RED: Direct harm possible, AI assists only

3. VERIFICATION PLAN: How will I check this output?

[ ] GREEN: Quick skim before use

[ ] YELLOW: Line-by-line clinical review

[ ] RED: Cross-reference guidelines, independent calculation

Template 2: Yellow Zone Documentation Draft

I'm a [SPECIALTY] doctor. Create a draft [DOCUMENT TYPE] for:

Patient context: [AGE, GENDER, CONDITION—no identifying details]

Purpose: [Why this document is needed]

Key points to include: [List main clinical points]

Format as [STRUCTURE NEEDED].

IMPORTANT: I will review all clinical content before use.

Please flag:

- Any recommendations that may vary by patient

- Areas where guidelines may have recently changed

- Content that requires verification of current drug availability

Template 3: Red Zone Thinking Partner

I need to think through a clinical decision. Help me consider

the relevant factors WITHOUT making the decision for me.

Clinical situation: [ANONYMIZED SUMMARY]

Decision I'm considering: [WHAT YOU'RE THINKING]

My current reasoning: [WHY YOU'RE LEANING THIS WAY]

Help me by:

1. What factors support this decision?

2. What factors argue against it?

3. What am I potentially not considering?

4. What would a senior colleague likely ask me?

I will make the final decision based on clinical judgment,

examination findings, and established guidelines.

Template 4: Team Risk Framework Discussion

Help me create a simple one-page guide for my clinic team on

when to use AI assistance and when to avoid it.

Our clinic context: [SPECIALTY, SETTING, PATIENT POPULATION]

Team members who might use AI: [DOCTORS/NURSES/STAFF]

Create a simple decision tree with:

- Tasks that are safe for AI assistance (GREEN)

- Tasks that need doctor verification (YELLOW)

- Tasks where AI should not be used for decisions (RED)

Keep it practical and specific to our context.

Make it printable for display in our workstation area.

Safety Note

This framework is about appropriate use, not avoiding AI entirely.

The goal is to harness AI’s benefits (saving time, reducing cognitive load, improving consistency) while protecting against its risks (confident errors, outdated information, missing context).

Key principles:

- AI doesn’t know what it doesn’t know. It will give confident answers even when wrong.

- Stakes determine oversight. Low stakes = standard review. High stakes = independent verification.

- You are always responsible. “AI told me” is not a legal defense. The clinical decision is yours.

- When in doubt, escalate the risk category. Treating a YELLOW task as RED wastes a few minutes. Treating a RED task as YELLOW could harm a patient.

- Your verification is the safety layer. AI doesn’t have your examination findings, your knowledge of this specific patient, or your clinical intuition.

Never use AI for:

- Final medication doses without independent calculation

- Emergency treatment decisions in real-time

- Definitive diagnosis without clinical correlation

- Content for medico-legal documents without expert review

- Any decision where you can’t explain your reasoning independently of AI

Copy-Paste Prompts

For GREEN Zone: Administrative Content

Create a [NOTICE/SIGN/TEMPLATE] for my clinic about [TOPIC].

Keep it professional and clear. Include Hindi translation if

appropriate. This is for [WAITING ROOM/RECEPTION/STAFF AREA].

For GREEN Zone: General Health Education

Create a simple one-page explainer about [CONDITION/TOPIC]

for patients in my waiting room. Use simple English appropriate

for someone with 10th standard education. This is general

information, not patient-specific advice. Include a note that

patients should discuss their specific situation with their doctor.

For YELLOW Zone: Patient Instructions (Verify Before Use)

I'm a [SPECIALTY] doctor. Create patient care instructions for

[CONDITION/PROCEDURE] for a [PATIENT CONTEXT].

Include: [SPECIFIC ELEMENTS NEEDED]

I will verify all clinical content against current guidelines

before giving this to my patient. Flag any instructions that

may need adjustment based on individual patient factors.

For YELLOW Zone: Documentation Draft

Draft a [DOCUMENT TYPE] for a patient with [ANONYMIZED SUMMARY].

Structure it with: [SECTIONS NEEDED]

I will review and modify all clinical content. This is a

starting framework only. Flag any areas where standard content

might not apply to all patients.

For RED Zone: Clinical Thinking Support

I'm working through a clinical decision about [SITUATION].

Don't give me the answer—help me think through it systematically.

What factors should I consider?

What might I be missing?

What questions should guide my decision?

What would make me reconsider my current approach?

Final decision will be based on my clinical judgment and

current evidence-based guidelines.

For Team Training: Risk Discussion

I want to discuss AI risk categories with my clinic team.

Create 5 realistic scenarios we might encounter, without

revealing the risk category. We'll discuss and categorize

them together.

Mix of GREEN, YELLOW, and RED scenarios relevant to a

[SPECIALTY] practice in [SETTING] India.

Format as brief case vignettes.

Decision Tree: Should I Use AI for This?

Print this and keep it visible:

START HERE
    |
    v
Does this task involve patient care decisions?
        /                        \
      YES                         NO
       |                           |
       v                           v
Is it about diagnosis,         GREEN ZONE
treatment, or dosing?          Standard review
    /          \                   |
  YES           NO                 |
   |             |                 |
   v             v                 v
RED ZONE      YELLOW ZONE       PROCEED
AI assists    Verify all        with quick
thinking      clinical          check
only          content
   |             |
   v             v
Make decision    Review before
independently    patient use

Do’s and Don’ts

Do’s

- Do consciously categorize every task before using AI

- Do state “I will verify” in prompts for YELLOW/RED tasks

- Do treat uncertain categories as higher risk

- Do use AI as a thinking partner for complex RED zone decisions

- Do create clinic-specific examples for each zone

- Do review this framework with your team

- Do update your categories as you learn what AI handles well and poorly

- Do maintain verification discipline even when AI seems reliable

Don’ts

- Don’t ask AI for definitive answers on RED zone questions

- Don’t let GREEN zone efficiency make you complacent about YELLOW/RED

- Don’t assume AI will flag when it’s uncertain

- Don’t use AI to avoid the discomfort of clinical uncertainty

- Don’t copy AI medication doses without independent verification

- Don’t let “it’s usually right” replace systematic checking

- Don’t forget that AI confidence doesn’t equal accuracy

- Don’t share identifiable patient details in any prompt

India-Specific Considerations

Drug Availability Varies

AI may suggest drugs that are not available in India, or that are sold here under different brand names. Treat any drug-related content as at least YELLOW zone.

Guideline Differences

Indian guidelines (API, ICMR, FOGSI, IAP) may differ from international ones. Always specify “Indian guidelines” and verify against current versions.

Cost Considerations

AI doesn’t know what your patient can afford. Patient instructions and treatment discussions need your input on practical, affordable options.

Regional Disease Patterns

Dengue, malaria, typhoid, tuberculosis, leptospirosis—prevalence varies by region and season. Your local epidemiological knowledge is essential context AI lacks.

Documentation Standards

Medico-legal requirements differ. Any document that could have legal implications (MLC reports, fitness certificates, insurance forms) is RED zone.

Language Nuances

AI can help with Hindi/regional language content but may miss cultural nuances. Verify translations with native speakers when possible.

1-Minute Takeaway

Use the Traffic Light System for every AI task:

GREEN (Go): Admin, formatting, general education

- Quick review is enough

- Low stakes, easily corrected

YELLOW (Caution): Patient instructions, documentation drafts, clinical brainstorming

- Verify all clinical content before use

- You check, then use

RED (Stop and Think): Diagnosis, treatment, dosing, emergencies

- AI helps you think, not decide

- Final answer comes from you + verified sources

The Golden Rule: When in doubt, treat as higher risk.

Quick Classification:

- Will a patient be directly affected? → At least YELLOW

- Could a wrong answer cause harm? → RED

- Is it administrative or general info? → Probably GREEN
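The three quick questions can be read as a small decision function, checked from most to least severe. A minimal Python sketch; the function name `quick_classify` and its two yes/no inputs are assumptions made for illustration, and the answers themselves still come from your own judgment.

```python
def quick_classify(patient_directly_affected: bool,
                   wrong_answer_could_harm: bool) -> str:
    """Apply the quick classification questions, most severe check first."""
    if wrong_answer_could_harm:
        return "RED"
    if patient_directly_affected:
        return "YELLOW"  # "at least YELLOW": escalate further if unsure
    return "GREEN"       # administrative or general information


if __name__ == "__main__":
    print(quick_classify(patient_directly_affected=False,
                         wrong_answer_could_harm=False))  # waiting-room notice
    print(quick_classify(patient_directly_affected=True,
                         wrong_answer_could_harm=False))  # patient instructions
    print(quick_classify(patient_directly_affected=True,
                         wrong_answer_could_harm=True))   # medication dosing
```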

Remember: AI doesn’t know what it doesn’t know. Your oversight is the safety layer. Match your checking to the stakes.

The framework in this guide builds on the basics covered in “What Prompt Engineering Is” (A3) and “Your First Clinical Prompts” (C1). As you get comfortable with risk classification, you’ll naturally develop intuition for when AI helps and when it could hurt.